PyTorch tricks

1. Categorical Reparameterization with Gumbel-Softmax (create categorical variables in neural networks)

- Background:

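- Discrete variables are important in neural networks; for example, they have been used to learn probabilistic latent representations that correspond to distinct semantic classes. Sampling a categorical variable is not differentiable, however, so such variables cannot be trained directly with back-propagation.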

- Contributions:

- Gumbel-Softmax, a continuous distribution on the simplex that can approximate categorical samples.

- The paper provides a simple, differentiable approximate sampling mechanism for categorical variables that can be integrated into neural networks and trained using standard back-propagation.

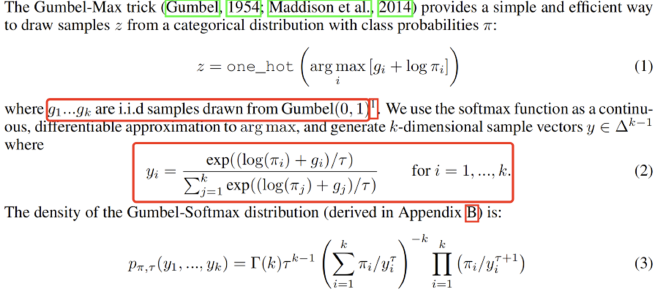

The Gumbel-Softmax Distribution
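
For reference, the sampling rule is usually written as follows. The symbols are assumptions that may not match the figure exactly: $\pi_1, \dots, \pi_k$ are the class probabilities, $\tau > 0$ is the temperature, and $g_1, \dots, g_k$ are i.i.d. Gumbel(0, 1) noise samples.

$$
g_i = -\log(-\log u_i), \qquad u_i \sim \mathrm{Uniform}(0, 1)
$$

$$
y_i = \frac{\exp\big((\log \pi_i + g_i)/\tau\big)}{\sum_{j=1}^{k} \exp\big((\log \pi_j + g_j)/\tau\big)}, \qquad i = 1, \dots, k
$$

As $\tau \to 0$ the vector $y$ approaches a one-hot sample from the categorical distribution; as $\tau \to \infty$ it approaches the uniform vector.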

Gumbel-Softmax Estimator

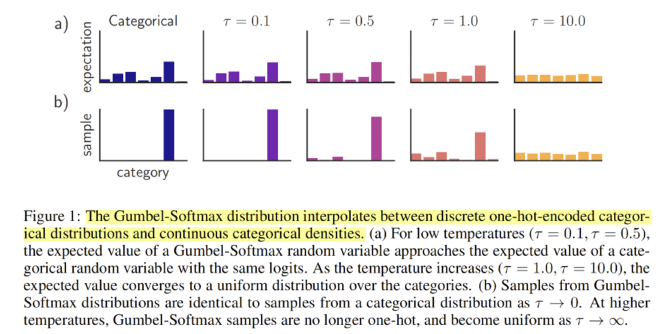
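
A minimal PyTorch sketch of the relaxed sample may make the estimator more concrete. The helper names `sample_gumbel` and `gumbel_softmax_sample`, the `eps` constant, and the example probabilities are illustrative assumptions, not code from the paper.

```python
import torch
import torch.nn.functional as F


def sample_gumbel(shape, eps=1e-20):
    """Draw Gumbel(0, 1) noise via g = -log(-log(u)), u ~ Uniform(0, 1)."""
    u = torch.rand(shape)
    # eps guards against log(0); its exact value is an arbitrary small constant.
    return -torch.log(-torch.log(u + eps) + eps)


def gumbel_softmax_sample(logits, tau=1.0):
    """Return a relaxed (soft) categorical sample over the last dimension."""
    g = sample_gumbel(logits.shape).to(logits.device)
    return F.softmax((logits + g) / tau, dim=-1)


# Hypothetical class probabilities, stored as log-probabilities (logits).
logits = torch.log(torch.tensor([[0.1, 0.6, 0.3]])).requires_grad_()
y = gumbel_softmax_sample(logits, tau=0.5)

# y lies on the simplex, and gradients flow back to the logits.
loss = (y * torch.tensor([1.0, 2.0, 3.0])).sum()
loss.backward()
```

PyTorch also ships a built-in version, `torch.nn.functional.gumbel_softmax(logits, tau=1, hard=False, dim=-1)`, which is probably the better choice outside of a teaching example.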

For learning, there is a tradeoff between small temperatures, where samples are close to one-hot but the variance of the gradients is large, and large temperatures, where samples are smooth but the variance of the gradients is small.

In practice, we start at a high temperature and anneal to a small but non-zero temperature.
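
One way to implement such a schedule is exponential decay with a floor. The function name `anneal_temperature` and the default values for `tau_start`, `tau_min`, and `rate` are assumptions chosen for illustration; in practice they would be tuned per task.

```python
import math


def anneal_temperature(step, tau_start=1.0, tau_min=0.5, rate=1e-4):
    """Decay the temperature exponentially with the training step,
    but never let it drop below tau_min (small but non-zero)."""
    return max(tau_min, tau_start * math.exp(-rate * step))


# Print the temperature at a few hypothetical training steps.
for step in (0, 1_000, 10_000, 100_000):
    print(step, round(anneal_temperature(step), 4))
```

The floor `tau_min` keeps the gradient variance bounded late in training, at the cost of samples that are not exactly one-hot.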

- Incorporates Noise: Gumbel-Softmax adds Gumbel noise to the input logits before applying the softmax. This makes its output stochastic, so the model can explore a variety of outputs, whereas plain Softmax is deterministic.
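
The difference can be checked directly with PyTorch's built-in `torch.nn.functional.gumbel_softmax`. The logits and the sample count `n` below are arbitrary illustrative values.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.tensor([2.0, 1.0, 0.1])

# Softmax is deterministic: the same logits always give the same output.
soft_a = F.softmax(logits, dim=-1)
soft_b = F.softmax(logits, dim=-1)

# Gumbel-Softmax draws fresh noise on every call, so outputs differ.
gs_a = F.gumbel_softmax(logits, tau=1.0, dim=-1)
gs_b = F.gumbel_softmax(logits, tau=1.0, dim=-1)

print(torch.equal(soft_a, soft_b))  # identical outputs
print(torch.equal(gs_a, gs_b))      # different outputs (with probability ~1)

# Over many draws, the most likely class of each sample follows the
# softmax probabilities, so the noise explores all classes.
n = 10_000
samples = F.gumbel_softmax(logits.expand(n, -1), tau=1.0, dim=-1)
freqs = torch.bincount(samples.argmax(dim=-1), minlength=3).float() / n
print(freqs, soft_a)
```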