Publications

Nothing truly valuable arises from ambition or from a mere sense of duty; it stems rather from love and devotion towards men and towards objective things.

-- Albert Einstein

2025

- PAL: Sample-Efficient Personalized Reward Modeling for Pluralistic Alignment

Daiwei Chen, Yi Chen, Aniket Rege, Zhi Wang, and Ramya Korlakai Vinayak. In The Thirteenth International Conference on Learning Representations, 2025

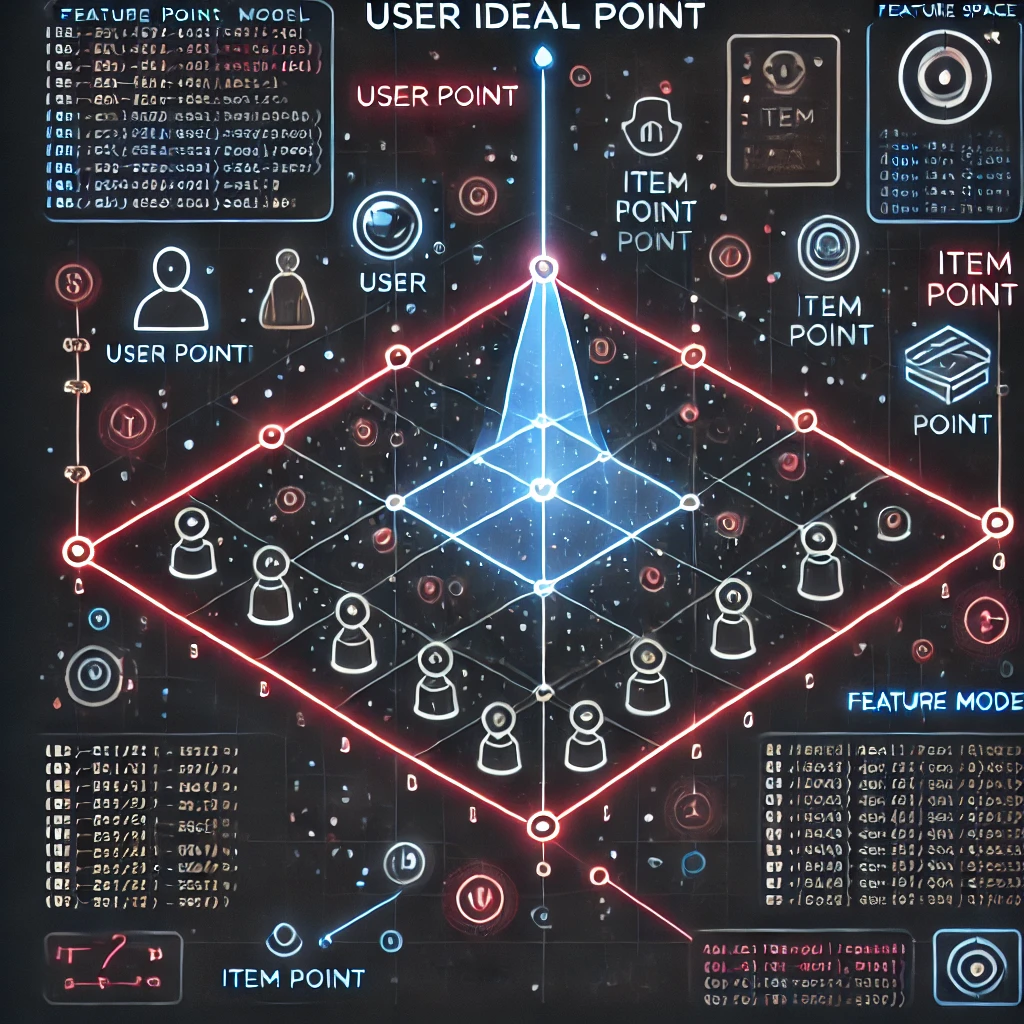

Foundation models trained on internet-scale data benefit from extensive alignment to human preferences before deployment. However, existing methods typically assume a homogeneous preference shared by all individuals, overlooking the diversity inherent in human values. In this work, we propose a general reward modeling framework for pluralistic alignment (PAL), which incorporates diverse preferences from the ground up. PAL has a modular design that leverages commonalities across users while catering to individual personalization, enabling efficient few-shot localization of preferences for new users. Extensive empirical evaluation demonstrates that PAL matches or outperforms state-of-the-art methods on both text-to-text and text-to-image tasks: on Reddit TL;DR Summary, PAL is 1.7% more accurate for seen users and 36% more accurate for unseen users compared to the previous best method, with 100× fewer parameters. On Pick-a-Pic v2, PAL is 2.5% more accurate than the best method with 156× fewer learned parameters. Finally, we provide a theoretical analysis of the generalization of rewards learned via the PAL framework, showing the reduction in the number of samples needed per user.

@inproceedings{chen2025pal, url = {https://openreview.net/forum?id=1kFDrYCuSu}, title = {{PAL}: Sample-Efficient Personalized Reward Modeling for Pluralistic Alignment}, booktitle = {The Thirteenth International Conference on Learning Representations}, author = {Chen, Daiwei and Chen, Yi and Rege, Aniket and Wang, Zhi and Vinayak, Ramya Korlakai}, year = {2025}, eprint = {2406.08469}, archiveprefix = {arXiv}, primaryclass = {cs.LG} }

2024

- Learning Capacity: A Measure of the Effective Dimensionality of a Model

Daiwei Chen*, Weikai Chang*, and Pratik Chaudhari, 2024

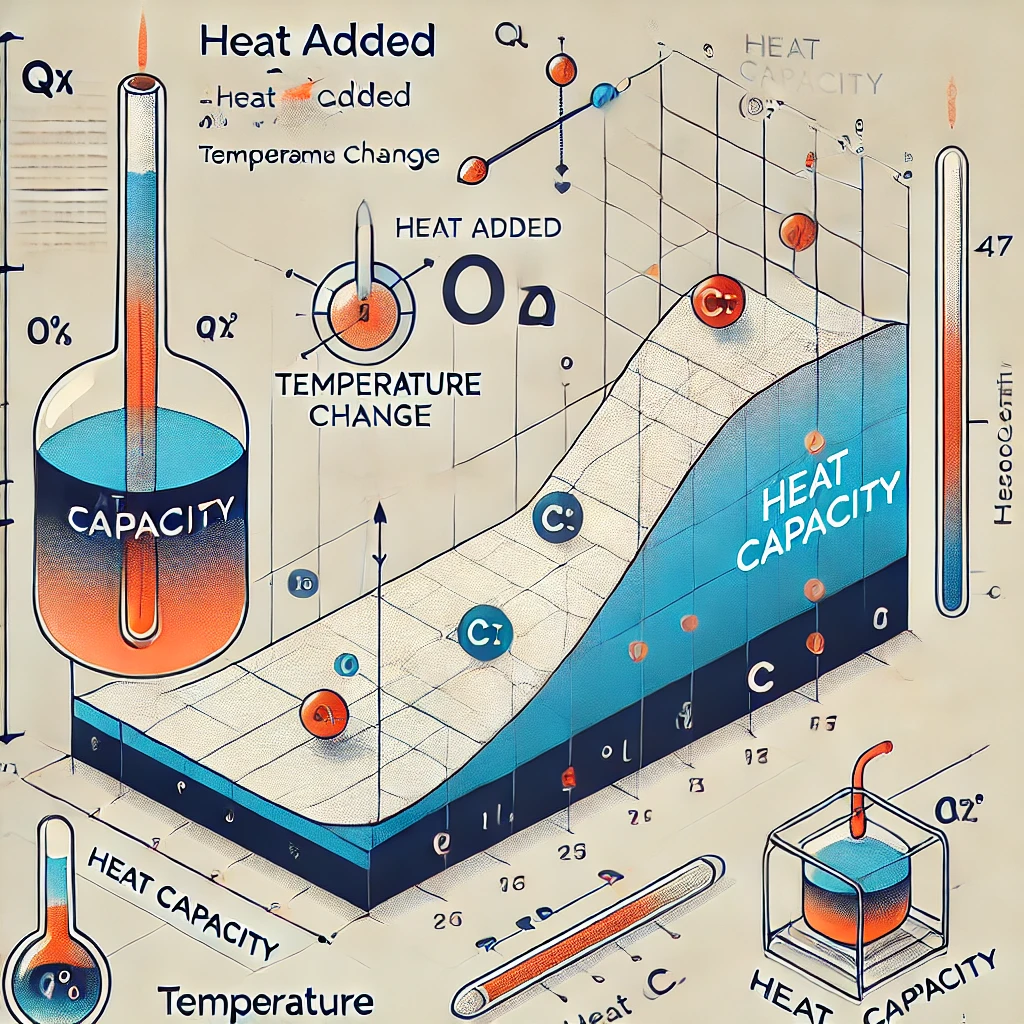

We exploit a formal correspondence between thermodynamics and inference, where the number of samples can be thought of as the inverse temperature, to define a "learning capacity", which is a measure of the effective dimensionality of a model. We show that the learning capacity is a tiny fraction of the number of parameters for many deep networks trained on typical datasets, depends upon the number of samples used for training, and is numerically consistent with notions of capacity obtained from the PAC-Bayesian framework. The test error as a function of the learning capacity does not exhibit double descent. We show that the learning capacity of a model saturates at very small and very large sample sizes; this provides guidelines as to whether one should procure more data or search for new architectures to improve performance. We show how the learning capacity can be used to understand the effective dimensionality, even for non-parametric models such as random forests and k-nearest neighbor classifiers.

@misc{chen2024learningcapacitymeasureeffective, title = {Learning Capacity: A Measure of the Effective Dimensionality of a Model}, author = {Chen, Daiwei and Chang, Weikai and Chaudhari, Pratik}, year = {2024}, eprint = {2305.17332}, archiveprefix = {arXiv}, primaryclass = {cs.LG}, url = {https://arxiv.org/abs/2305.17332} }